Well thankfully I've returned from my stint over 200 clicks north of the arctic circle with all my fingers and toes! Mind you, we were lucky to catch some unseasonably warm weather - the coldest it got was a toasty -3 (celsius) instead of the average -20 at this time of year.

Anyhow, I've been working on a bit of a special rant philosophical post to end the year, so I'll get my well wishes out of the way now.

I hope you've had a great 2008. I had almost zero expectations when I started this blog back in January, and have been completely blown away at the level of readership and attention in the community that I have received. So to that end, thanks everyone for reading, thanks to the guys in the community I talk to / know (they're listed in the "linkage" section down the right there). Thanks to John Troyer at VMware for helping to get me on the map and for being so tolerant with the profanity ;-), and thanks to all the other people at VMware who have helped along the way... Lance, Carter, Steve, the account team that handles my company (you think I give VMware a hard time as a blogger... that's nothing compared to what I put them through as a customer). And a special shout out to all those who took the time to email me over the year - I really appreciate the time anyone takes to give me their angle or experiences with regards to a post, keep it coming.

In the new year I'll be moving to dedicated hosting, so hopefully I can start doing some more stuff like hosting a few whitepapers and small utilities / scripts. And get a bit more of a custom theme going (the green ain't going nowhere though ;-).

The coming year is going to be interesting for many people on many fronts, and I don't expect it will be an easy one. But as Albert Einstein said, "In the midst of difficulty lies opportunity". Here's wishing that you all make the most of those opportunities should they arise, and have a great 2009.

Tuesday 30 December 2008

Wednesday 24 December 2008

Merry Christmas!

Just a quick note to say Merry Christmas to everyone. I'll be ducking off to try and catch the Northern Lights between now and New Year's, including a stint of sleeping on ice. Should be cool (ho ho ho, pun fully intended!). Wherever you are, I hope you have a safe and happy festive season.

Sunday 21 December 2008

It's Beginning to Look a Lot Like Citrix...

VDI that is. Technically that should say 'terminal services', but I had to get in a Christmas themed post title before the 25th :)

Remember back when VDI reached that tipping point in the enterprise? It was hailed as offering unprecedented levels of flexibility. Hailed as the slayer of terminal server based environments with their draconian mandatory profiles and restricted application sets. And biggest of all, hailed as finally providing us with a unified environment for application packaging, support and delivery. And so we all embarked on this VDI journey. But of course, there is a difference between knowing the path and walking the path. And having travelled this road for a while now, a sense of déjà vu is creeping in.

Yes, it is decidedly feeling a lot like Citrix. In an effort to drive down costs, the VDI restrictions and caveats are coming out of the woodwork. Scheduled desktop "refreshes", locking down desktop functionality in the name of stopping writes to the system drive, redirecting profiles to other volumes, squeezing a common set of required applications into a base 'master' template and disallowing application installs, etc etc. Software solutions are being ushered in to address these issues (brokers, app virtualisation, user environment virtualisation) - all for a premium of course. The same way it happened with Terminal Services / Citrix. We need to buy Terminal Server CALs from Microsoft and Citrix CALs from Citrix, pay extra for enterprise features like useful load balancing, and even more for decent reporting capabilities. Then we need to do something about profile management, we need separate packaging standards, there are separate support teams, separate application compatibility and supportability issues, etc etc.

If we continue to slap all these restrictions and add all these infrastructure / software (ie cost) requirements on top of VDI, we run the risk of turning it into the very thing we were trying to get away from in the first place. The problem is without them, VDI doesn't provide a cost effective solution for _general desktop replacement in well connected environments_ yet. I'll emphasize the important bit in that sentence, in bold caps so I'm not misunderstood. _GENERAL DESKTOP REPLACEMENT IN WELL CONNECTED ENVIRONMENTS_. There are of course loads of other use cases where VDI makes great sense. But a desktop replacement for the average office worker in a well connected environment ain't one of them.

And the desktop requirements for the average office worker are on the rise. In this new world of communication, IM based voice and video are coming to the fore. And yet none of the big players in the VDI space have a solution for universal USB redirection or high quality bi-directional audio/video to remote machines. But does it even make sense to develop such things? Either way, you still have a requirement for local compute resources and a local operating environment in which these devices can be used. Surely it makes more sense to use that local machine for the minimal desktop and applications that use this hardware (VOIP clients, etc), and remote all the other apps into the physical machine from somewhere else?

Maybe we'd be better off parking those plans for VDI world domination for the moment, and focusing on next generation application delivery and user environment virtualisation for our current desktop infrastructure (both physical and virtual). Once those things are in place, we will be in a much better position to assess just how much sense VDI really makes as a general desktop replacement.

Monday 15 December 2008

Boche, Lowe - You Got It All Wrong!

Sunday 14 December 2008

Kicking ESX Host Hardware Standards Down a Notch

The hardware vs software race is becoming more and more a hare vs tortoise these days, with the advent of multicore. And you can understand why - concurrency is hard. _Very_ hard. But oddly enough, I don't see much on the intertubes about people changing their hardware standards, except for the odd bit of marketing.

Although the HP BL 495c is in some respects an interim step to where we really want to go (think PCIe-V), the CPU / memory design of the blade is pretty much spot on. That is, as more cores become available, we should be moving to fewer sockets and more slots.

I'm not going to entertain the whole blade vs rackmount debate. I totally understand that blades may not make much sense in small shops (places with only a few hundred servers). I probably should change the description of my blog... I only talk enterprise here people. Not small scale, not internet scale, but enterprise scale (although to be honest a lot of the same principles apply to enterprise and internet scale architectures, on the infrastructure side at least).

The next release of VMware Virtual Infrastructure will make this even more painfully obvious. VMware Fault Tolerance was never designed for big iron - it's designed to make many small nodes function like big iron. OK, so maybe the hare vs tortoise comparison isn't really fair with regards to VMware ;-).

But this doesn't only apply to ESX host hardware standards - it should apply to pretty much any x86 hardware standards, _especially_ those targeted at Windows workloads. We've seen it time and time again - even if the Windows operating system did scale really well across lots of cores (and it won't until 2008 R2), the applications just don't. We only need to look at the Exchange 2007 benchmarks that were getting attention around the time of VMworld Europe 2008 for evidence of this. If Exchange works better with 4 cores than it does 16, you can bet your life that 99.999% of Windows apps will be in the same boat. Giving your business the opportunity to purchase the latest and greatest 4 way box will do nothing but throw up artificial barriers to virtualisation. The only Windows apps that require so much grunt are poorly written ones.

So if you haven't revisited your ESX host hardware standards in the past year or so, it's probably time to do so now, so you can be ready when VI.next finally drops. Concurrency may be hard, but I wouldn't call distributed processing easy either - the more the underlying platform can abstract these difficult programming constructs, the easier it will be to virtualise.

Saturday 13 December 2008

Check out Martin Ingram on DABCC...

While I'm a little insulted that Martin didn't tell me he is now contributing to Doug Brown's excellent site (j/k Martin :-), he is and you should keep an eye out for his posts.

Martin is Strategy VP for AppSense. I and others have been saying for a while that user environment virtualisation is the final piece of the virtualisation pie to fully realise statelessness, and let me tell you now there is no better product on the market for this than Environment Manager. No I'm not getting paid to write this, but in the name of responsible disclosure I will say we have a long standing and excellent relationship with AppSense at the place I work. We have stacked them up against their competitors many times over the years and on the technical / feature side they have blown the competition away every time. They are the VMware of the user environment virtualisation space.

So welcome to the blogosphere Martin, and to make up for you not telling me about the blogging I'll be expecting a ticket to get onboard the AppSense yacht at VMworld Europe 2009 :-D. And while I'm here, shout outs to Sheps and 6 figures at AppSense.

Wednesday 10 December 2008

ESXi 3 Update 3 - Free Version Unshackled, hoo-rah!

I'm not going to try and claim credit for this development, but Rich Brambley (the only blog with a description that I feel outshines my own :-) broke the news today that the free version ESXi 3 Update 3 appears to have a fully functional API. I plan on testing this out tomorrow, and will report back as I'm sure others will too!

UPDATE The great Mike D has beaten me to it. Looks like all systems are go for the evolution of statelesx... ;-)

UPDATE 2 Oh man... who the !#$% is running QA in VMware? What a shocker!

VMware View - Linked Clones Not A Panacea for VDI Storage Pain!

Why do I always seem to be the bad guy? I know the other guys don't gloss over the limitations or realities of product features on purpose, but sheesh it always seems to be me cutting a swathe of destruction through the marketing hype. Somehow I don't think I will be in contention for VVP status anytime soon (I'm sure the profanity doesn't help either but... fuck it. Hey it's not like kids read this, and I'm certainly not out there doing this kind of thing).

As any reader of this blog will be aware, VMware View was launched a week or so ago. Amongst its many touted features was the oft requested "linked clone" functionality, designed to reduce the storage overheads associated with VDI. But it may not be the panacea it's being made out to be.

My 2 main concerns are:

1) Snapshots can grow up to the same size as the source disk. Although this situation will probably not be too common, you can bet your life that they will grow to the size of the free space in the base image in a matter of weeks. I've spent an _extensive_ amount of time testing this stuff out to try and battle the VDI storage cost problem. But no matter how much you tune the guest OS, there's just no way to overcome the fact that the NTFS filesystem will _always_ write to available zero'ed blocks before it writes to blocks containing deleted files. This means if you have 10GB free space, and you create and delete a 1GB file 10 times, you will end up with no net change in used storage within the guest, however your snapshot will now be 10GB in size. Don't believe me? Try it yourself. Now users creating and deleting large files in this manner may again be uncommon, but temporary internet files, software distribution / patching application caches, patch uninstall directories, AV caches and definition files, temporary office files... these things all add up. Fast. Now of course each environment will differ in this regard, so the best thing you can do to get an idea of the storage savings you can expect is to take a fresh VDI machine, snapshot it, and then use it normally for a month. Or if you think you're not the average user, pick a newly provisioned user VDI machine and do the test. Monitor the growth of that snap file weekly. I think you'll be surprised at what you find. Linked clones are just snapshots at the end of the day, they don't do anything tricky with deduplication, they don't do anything tricky in the guest filesystem, they are just snapshots. Which leads me to my next major concern.

2) LUN locking. We all know that a lock is acquired on a VMFS volume whenever volume metadata is updated. Metadata updates occur every time a snapshot file is incremented, and at the moment this is hardcoded to 16MB increments. For this reason, the recommendation from VMware has always been to minimise the number of snapshotted machines on a single LUN. Something like 8 per LUN I think was the ballpark maximum. Now if the whole point of linked clones is to reduce storage, it's fair to assume you'd be planning to increase the number of VMs per LUN, not decrease them. In which case Houston, we may have a problem. Perhaps linked clones increment in blocks of greater than 16MB, which may go some way towards solving the problem. But at this time I don't know if that's the case or not. Someone feel free to check this out and let me know (Rod, I'm looking at you :-)
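To make the two concerns above a bit more concrete, here is a toy simulation in Python. It is only a sketch under stated assumptions, not a model of how VMFS or NTFS really behave internally: it assumes the guest always prefers never-written blocks (as claimed above), that the delta file keeps one copy of every block written since the snapshot, and that the delta grows in fixed 16MB steps. The names `ToyGuestDisk` and `churn` are made up for illustration.

```python
"""Toy model of a snapshot delta file under create/delete churn.

Assumptions (illustrative only, not taken from VMware or Microsoft docs):
- the guest allocator always prefers never-written (zeroed) blocks;
- the delta stores one copy of every block written since the snapshot;
- the delta file grows in fixed 16MB steps, each needing a metadata update.
"""

BLOCK_MB = 1        # granularity of this toy model
GROW_STEP_MB = 16   # growth increment quoted in the post


class ToyGuestDisk:
    def __init__(self, free_mb):
        self.never_written = list(range(free_mb // BLOCK_MB))  # zeroed free blocks
        self.freed = []             # blocks released by deleted files
        self.delta_blocks = set()   # blocks captured in the snapshot delta

    def write_file(self, size_mb):
        """Allocate blocks for a file, preferring never-written blocks."""
        blocks = []
        for _ in range(size_mb // BLOCK_MB):
            pool = self.never_written if self.never_written else self.freed
            block = pool.pop()
            blocks.append(block)
            self.delta_blocks.add(block)  # a rewrite of a known block adds nothing
        return blocks

    def delete_file(self, blocks):
        """Deleting frees space in the guest but never shrinks the delta."""
        self.freed.extend(blocks)

    def delta_mb(self):
        return len(self.delta_blocks) * BLOCK_MB

    def grow_events(self):
        """Number of 16MB extensions of the delta (ceiling division)."""
        return -(-self.delta_mb() // GROW_STEP_MB)


def churn(free_mb, file_mb, cycles):
    """Create and delete one file `cycles` times; return (delta MB, grow events)."""
    disk = ToyGuestDisk(free_mb)
    for _ in range(cycles):
        disk.delete_file(disk.write_file(file_mb))
    return disk.delta_mb(), disk.grow_events()


if __name__ == "__main__":
    delta, events = churn(free_mb=10 * 1024, file_mb=1024, cycles=10)
    print(f"delta size: {delta} MB, 16MB grow events: {events}")
```

Under those assumptions, ten create/delete cycles of a 1GB file leave the guest with no net change in used space while the delta sits at the full 10GB, and every 16MB of that growth is another metadata update on the LUN. Real numbers will differ, which is exactly why the month-long test is worth doing.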

Now of course there are many other design considerations, such as having a single small LUN for the master image and partitioning your array cache so that LUN is _always_ cached. It's a snap'ed vmdk and therefore read only so this won't be a problem. Unless you don't have enough array cache to do this (which will likely be the case outside of large enterprises, or even within them in some cases).

In my mind, the real panacea for the storage problem is statelessness. User environment virtualisation / streaming and app virtualisation / streaming are going to make the biggest dent in the storage footprint, while at the same time allowing us to use much cheaper storage because when the machine state is empty until a user logs on, it doesn't matter so much if the storage containing 50 base VM images disappears (assuming you catered for this scenario, which you did because you know that cheaper disk normally means increased failure rate).

So by all means, check View out. If high latency isn't a problem for you, there's little reason to choose another broker (aside from cost of course). But don't offset the cost of the broker with promises of massive storage cost reduction without trying out my snap experiment in your environment first, or you may get burnt.

UPDATE Rod has a great post detailing his thoughts on this. Go read it!

UPDATE 2 Another excellent post on this topic from the man himself, Chad Sakac. Chad removes any fears regarding LUN locking, which IMHO is only possible with empirical testing, which is exactly what Chad and his team have done. Excellent work, and thank you. With regards to my other concern of snapshot growth and the reality of regular snapshot reversion, he also clarifies the absolutely vital point in all of this, which I didn't do a very good job of in my initial post - every environment will differ. Although I still believe the place I work is fairly typical of any large enterprise at this point in time, the absolute best thing for you to do in your shop is test it and see what the business is willing to accept in terms of rebuilds or investment in other technologies such as app virtualisation and profile management. They may or may not offset any storage cost reductions, again every place will differ.

Sunday 7 December 2008

Why Times Like These are _Great_ for Enterprise IT

First, my heart goes out to all those who have found themselves out of a job during these dark financial times. I hope you won't find this post antagonistic in any way, it is obviously written from the perspective of someone who is still gainfully employed in a large enterprise (but what the future holds is anyone's guess). If you are in a tight situation right now, I can only hope that this post will give you some ammunition for your next interview, or help give some focus to your time between jobs.

OK, I'll lighten up a little now :-). So the title of this post may seem a little odd. All we seem to be hearing about these days is layoffs and cutbacks. I know of several institutions that have mandated that no contractors be renewed, and others that are chopping full time staff alongside contractors. Either way, doesn't sound too great at all, and in fact I'm beginning to think I may change that title.

But then I remember what's great about restriction - it's a lightning rod for innovation. Look at all the success stories on the web - the vast majority of them were born from tight circumstances, from the poor student to the unemployed developer. And if you're on top of your game in the enterprise, it's no different - you can write your own internal success story.

Some of the things I hear around my office are no doubt typical of any architecture & engineering group in a large investment bank. The focus from on high is on keeping the lights on, and cutting costs at any available opportunity. But what's maybe different is the approach to cost cutting, which is absolutely grounded in fundamental mathematical principles. What do I mean by that? If you need to spend $1 to save $2, then you're still saving $1 so it's worth doing.

But why would you need financial dire straits for such behaviour? Surely you would follow the same principles in the good times as well as bad? Damn straight we do - the difference is in these times, _everything_ can be challenged. And when you can challenge everything, you can innovate like an engineer possessed.

Case in point, clouds. And let's be honest, there's only one viable player in the market currently, Amazon. And boy are the eyes of the business on them. But what's been lacking until now is the ability for an internal cloud to compete with them on purely a cost basis. Amazon's EC2 pricing includes a bucket of compute resources, SLAs for those compute resources, and a choice of preconfigured AMIs (I'm conveniently ignoring the ability to roll your own AMI and upload it, for the time being).

Here's what Amazon's pricing doesn't include. Guest support, backup, monitoring, antivirus, patching, and auditing. Where is the greatest cost associated with enterprise compute resources? Those very same things.

Now, yes I am conveniently ignoring the ability to roll your own AMI and upload it to EC2, but even if you did include all the aforementioned agents in your image, how practical would that actually be? I have yet to see an enterprise with a monitoring system that was intelligent enough to know the difference between "down because someone shut me down because they don't need me right now" and "down because of a hardware / software error". So if, in order to get Amazon's cheap pricing, we're willing to forgo monitoring within the guest, then surely I can do the same for my internal cloud machines? And the same goes for backup. Machines on a backup schedule don't only attract backup software licensing costs, there is monitoring overheads, and storage costs associated with the backed up data. And again, backup systems are generally inflexible. A backup missed is a backup missed, and generally the operational decree will be that it needs to be run at the first opportunity. But in order to get Amazon's cheap pricing (there's additional charges for network IO), we're willing to forgo backups. Hey, there's another cost I can strike off the list for my internal cloud offering! How about authentication? Domain membership has all sorts of implications for creation / deletion / archiving or machines, snapshots that get rolled back beyond 30 days etc. Windows based EC2 machines with "authentication services" attract something like a 50% premium. Perhaps my internal cloud machines should too. After all, does every development box _really_ need to be on the domain? How about grid nodes?
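The monitoring complaint above boils down to the monitor not knowing what state a machine is _meant_ to be in. A minimal Python sketch of the idea, assuming a hypothetical provisioning layer that records the owner's intent (the `Intent`, `Machine` and `should_alert` names are invented for illustration and don't correspond to any real monitoring product):

```python
from dataclasses import dataclass
from enum import Enum


class Intent(Enum):
    """Desired state, as recorded by a hypothetical provisioning layer."""
    RUNNING = "running"
    STOPPED = "stopped"   # the owner shut it down on purpose


@dataclass
class Machine:
    name: str
    intent: Intent    # what the owner asked for
    reachable: bool   # result of the latest health probe


def should_alert(machine: Machine) -> bool:
    """Alert only when a machine that is meant to be up cannot be reached."""
    return machine.intent is Intent.RUNNING and not machine.reachable


if __name__ == "__main__":
    fleet = [
        Machine("grid-node-01", Intent.STOPPED, reachable=False),  # parked, no alert
        Machine("dev-box-07", Intent.RUNNING, reachable=False),    # genuine fault
        Machine("dev-box-08", Intent.RUNNING, reachable=True),     # healthy
    ]
    for m in fleet:
        print(m.name, "ALERT" if should_alert(m) else "ok")
```

The check itself is trivial. The hard part in an enterprise is having a single place that reliably records intent, which is the bit most monitoring setups I've seen lack.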

I won't bore you by going through the rest of the list, but you get the picture. In order to get this rock bottom pricing, there is a _lot_ of functionality that is generally taken for granted in the enterprise that needs to be stripped out.

One of the biggest challenges in the enterprise is reworking the charging model to strip out all these things that now look like "extras" in comparison to Amazon's EC2. And this is where the "great times for enterprise computing" comes into the picture - it's about time these things were fucking well treated as extras, and that an _accurate_ pricing model was available for the business that broke down all these costs, and made them optional. That's the only way we'll get an apples to apples comparison to the likes of EC2.
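To make the "extras" idea a bit more concrete, here's a rough sketch of what an itemised charging model might look like. Every name and rate in it is a made-up placeholder (the only number borrowed from above is the roughly 50% authentication premium), so treat it as an illustration of the shape of the thing rather than anyone's actual price list.

```python
# Hypothetical itemised charging model: bare compute plus opt-in extras.
# All rates are placeholders, not real Amazon or internal figures.
BASE_COMPUTE_RATE = 0.10  # assumed cost per instance-hour for bare compute

# Each extra is a fractional premium on top of the base compute rate.
# Only the ~50% authentication premium echoes a figure from the post.
EXTRAS = {
    "authentication": 0.50,
    "monitoring": 0.20,
    "backup": 0.30,
    "antivirus": 0.10,
    "patching": 0.15,
    "auditing": 0.10,
}


def hourly_rate(selected_extras):
    """Return the hourly charge for bare compute plus the chosen extras."""
    unknown = set(selected_extras) - set(EXTRAS)
    if unknown:
        raise ValueError(f"unknown extras: {sorted(unknown)}")
    premium = sum(EXTRAS[name] for name in selected_extras)
    return BASE_COMPUTE_RATE * (1 + premium)


# A grid node that needs nothing but compute, versus a fully loaded server.
bare = hourly_rate([])
loaded = hourly_rate(list(EXTRAS))
```

The point of structuring it this way is that the bare compute line is the only thing that gets compared against EC2, and everything else shows up as a visible, optional line item.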

And you know what? It's actually happening where I work. All the restrictions that would've hamstrung us from ever offering something on par with EC2 are being lifted. Finally, cheap utility _compute_ (I can't stress that enough - COMPUTE) is something we're actually going to be able to offer for the first time ever. And it's all thanks to the financial crisis, because the laser like focus on cost would never have happened otherwise.

This of course also has implications for VMware. When I say _everything_ can be challenged, I mean _everything_. Would it be cheaper for us to pay for VMware, or for someone like me to be given a few internal developers and infrastructure resources and take a shot at building something like EC2, right down to the Xen part of things?

Right now in many companies, it's survival of the fittest. If all we're going to be left with internally is a skeletal staff of absolute guns in their respective fields, along with a mandate to drive down costs through innovation and to hell with how things used to be done, then you can bet your bottom dollar something like that is entirely possible.

So if you do find yourself out of work, maybe it's time to further develop those automation skills. Get familiar with web services, pick any language you like - C#, Java, Python etc. PowerShell is a great option too, check out the PowerShell 2.0 CTP. Sign up for an Amazon Web Services account and figure out how to do stuff (you only pay for what you use, it can be cheap). Think about what they offer, find the strengths and the weaknesses, and then think about how you might implement something similar in an enterprise, and what would be required to do it better. Think about how you might burst into something like EC2 from an internal cloud. What layers need to be loosely coupled in order to do such a thing in the most efficient way? What implications do external clouds have for internal cloud architecture and operations? Now take all that, and expand your scope to Google's cloud offering, what's coming with Azure, what other players there are in the field.

Yes sir, exciting times ahead in the next few years, even more so than they were before the financial meltdown occurred.

OK, I'll lighten up a little now :-). So the title of this post may seem a little odd. All we seem to be hearing about these days is layoffs and cutbacks. I know of several institutions who have mandated that no contractors be renewed, and others who are chopping full time staff alongside contractors. Either way, doesn't sound too great at all, and in fact I'm beginning to think I may change that title.

But then I remember what's great about restriction - it's a lightning rod for innovation. Look at all the success stories on the web - the vast majority of them were born from tight circumstances, from the poor student to the unemployed developer. And if you're on top of your game in the enterprise, it's no different - you can write your own internal success story.

Some of the things I hear around my office are no doubt typical of any architecture & engineering group in a large investment bank. The focus from on high is on keeping the lights on, and cutting costs at any available opportunity. But what's maybe different is the approach to cost cutting, which is absolutely grounded in fundamental mathematical principles. What do I mean by that? If you need to spend $1 to save $2, then you're still saving $1 so it's worth doing.

But why would you need financial dire straits for such behaviour? Surely you would follow the same principles in the good times as well as bad? Damn straight we do - the difference is in these times, _everything_ can be challenged. And when you can challenge everything, you can innovate like an engineer possessed.

Thursday 4 December 2008

ThinApp Blog - would you like a glass of water to help swallow that foot?

I'm going to try and resist the urge to make this post another 'effenheimer' (as Mr Boche might say :-) but my mind boggles as to WTF the ThinApp team were thinking when they made this post. Way to call out a major shortcoming of your own product guys! To be honest, I'm completely amazed that VMware don't support their own client as a ThinApp Package. Say what you will about Microsoft, but you gotta respect their 'eat our own dogfood' mentality. To my knowledge, if you encounter an issue with any Microsoft client based app that is delivered via App-V, they will support you to the hilt.

Now that I've passed the Planet v12n summary wordcount, I can give in to my temptation and start dropping the F bombs, because I'm mad. The VI client is a fairly typical .NET based app. If VMware themselves don't support ThinApp'ing it, how the fuck do they expect other ISVs with .NET based products to support ThinApp'ing their apps? Imagine if VMware said that running vCenter in a guest wasn't supported - what kind of message would that send about machine virtualisation! Adding to the embarrassment, it seems that ThinApp'ing the .NET Framework itself is no dramas!!!

It's laughable that a company would spend so much time and money on marketing efforts like renaming products mid-lifecycle, but let stuff like this slip by the wayside. Let's hope this is fixed for the next version of VI.

Wednesday 3 December 2008

HA Slot Size Calculations in the Absence of Resource Reservations

Ahhh, the ol' HA slot size calculations. Many a post has been written, many a document published that tried to explain just how HA slot sizes are calculated. But the one thing missing from most of these, certainly from the VMware documentation, is what behaviour can be expected when there are no resource reservations on an individual VM. For example in many large scale VDI deployments that I know of, share based resource pools are used to assert VM priority rather than resource reservations being set per-VM.

So here's the rub.

In the absence of any VM resource reservations, HA calculates the slot size based on a _minimum_ of 256MHz CPU and 256MB RAM. The RAM amount varies however, not by something intelligent like average guest memory allocation or average guest memory usage, but by the table on page 136 / 137 of the resource management guide, which is the memory overhead associated with guests depending on how much allocated memory the guest has, how many vCPUs the guest has, and what the vCPU architecture is. So let's be straight on this. If 256MB > 'VM memory overhead', then 256MB is what HA uses for the slot size calculation. If 'VM memory overhead' > 256MB, then 'VM memory overhead' is what is used for the slot size calculation.

So for example, a cluster full of single vCPU 32bit Windows XP VMs will have the default HA slot size of 256MHz / 256MB RAM in the absence of any VM resource reservations. Net result? A cluster of 8 identical hosts, each with 21GHz CPU and 32GB RAM, and HA configured for 1 host failure, will result in HA thinking it can safely run something like 7 x (21GHz / 256MHz) guests in the cluster!!!
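For anyone who wants to plug in their own numbers, here's a minimal sketch of that arithmetic. It's a simplification, not VMware's actual algorithm: the function names are mine, I'm assuming identical hosts, and I'm assuming HA takes the more restrictive of the CPU and memory slot counts on each host.

```python
def slot_memory_mb(vm_memory_overhead_mb, minimum_mb=256):
    """Memory slot size with no reservations: the larger of 256MB and the VM overhead."""
    return max(minimum_mb, vm_memory_overhead_mb)


def slots_per_host(host_cpu_mhz, host_mem_mb, slot_cpu_mhz=256, slot_mem_mb=256):
    """Whole slots that fit on one host, limited by whichever resource runs out first."""
    return min(host_cpu_mhz // slot_cpu_mhz, host_mem_mb // slot_mem_mb)


def cluster_slots(hosts, host_failures_tolerated, per_host):
    """Slots HA believes are usable once the failover hosts are set aside (identical hosts assumed)."""
    return (hosts - host_failures_tolerated) * per_host


# The example cluster: 8 hosts, each 21GHz / 32GB, configured for 1 host failure.
per_host = slots_per_host(host_cpu_mhz=21_000, host_mem_mb=32 * 1024)
print(per_host)                       # 82 (CPU is the limiting resource here)
print(cluster_slots(8, 1, per_host))  # 574
```

So on paper HA would be happy to power on well over 500 guests in that cluster, which is nowhere near what 7 hosts with 32GB each could realistically carry.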

Which led to a situation I came across recently whereby HA thought it still had 4 hosts worth of failover capacity in an 8 host cluster with over 300 VM's running on it, even though the average cluster memory utilisation was close to 90%. Clearly, a single host failure would impact this cluster, let alone 4 host failures. 80-90% resource utilisation in the cluster is a pretty sweet spot imho, the question is simply do you want that kind of utilisation under normal operating conditions, or under failure conditions. As an architect, I should not be making that call - the business owners of the platform should be making that call. In these dark financial times, I'm sure you can guess what they'll opt for. But the bottom line is the business signs off on the risk acceptance - not me, and certainly not the support organisation. But I digress...

I hope HA can become more intelligent in how it calculates slot sizes in the future. Giving us the das.vmMemoryMinMB and das.vmCpuMinMHz advanced HA settings in vCenter 2.5 was a start, but something more fluid would be most welcome.

PS. I'd like to thank a certain VMware TAM who is assigned to the account of a certain tier 1 global investment bank that employs a certain blogger, for helping to shed light on this information. You know who you are ;-)

Thursday 27 November 2008

Microsoft Offline Virtual Machine Servicing Tool 2.0

This one seems to have slipped by like a Vaseline coated ninja. Microsoft have released the long awaited version 2.0 of their Offline Virtual Machine Servicing Tool (jokes ppl, jokes).

Seriously though, what impresses me the most about this tool is its size, which is only a few MB - it's little more than a bunch of scripts cobbled together with a UI. Makes one wonder how easily it could be hacked to work with vCenter and WSUS... hmmmm...

Wednesday 26 November 2008

HA Allows Cake for All? No Wonder I'm Overweight!

I've got a cake. It's my favourite variety, caramel mud. As much as I'd like to eat the whole cake myself, in a shameless attempt to increase my blog audience I'm going to eat half and give half to the first person to email me after reading this post*.

Question: How much cake is left for the second person to email me after reading this post?

None. That's right, I ate half and gave the other half to the first mailer.

But if I was HA configured to allow a single host failure, some recently observed behaviour indicates that the first mailer would actually only get 1/4 of the cake, and there would be some cake left for every person who mailed me until infinity. This is because HA doesn't appear to have any concept of a 'known good state' - instead, it seems that if a single host fails (half cake eaten by me), it then re-evaluates the cluster size (the half cake left is now considered the whole cake) and adjusts its resource cap to allow for another single host to fail (first mailer takes half of the new cake size, the cake size is recalculated, next mailer gets half the new cake size, the cake size is recalculated, ad infinitum).
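If the cake maths is making your head hurt, here's a small sketch of the same thing. It models only the behaviour as I've described it (the function names are mine, and this is not how HA is actually implemented): every time the cake shrinks, whatever is left gets treated as a brand new whole cake.

```python
from fractions import Fraction


def cake_shares(mailers):
    """Each mailer gets half of whatever is left, after I have eaten my half."""
    remaining = Fraction(1, 2)  # I've already eaten half the cake
    shares = []
    for _ in range(mailers):
        share = remaining / 2  # the leftover is treated as a new whole cake
        shares.append(share)
        remaining -= share
    return shares, remaining


def usable_hosts(initial_hosts, failed_hosts, tolerated=1):
    """Hosts HA lets you fill if the failover reserve is recomputed from the survivors."""
    alive = initial_hosts - failed_hosts
    return max(alive - tolerated, 0)


shares, left = cake_shares(3)
print(shares)  # [Fraction(1, 4), Fraction(1, 8), Fraction(1, 16)]
print(left)    # 1/16, so there is always some cake left for the next mailer
print([usable_hosts(8, failed) for failed in range(4)])  # [7, 6, 5, 4]
```

In host terms, an 8 host cluster that has already lost one host still holds a full host in reserve out of the remaining 7, rather than releasing that reserve because the failure it was guarding against has already happened.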

In my mind, this behaviour is not correct. If I tell HA I want to allow for a single host failure, then it should have some idea of what my known good state is. When one host actually does fail, then my risk criterion has been met and all resource guarantees in the event of a subsequent host failure are off. But it seems that all HA knows is how many hosts are in a cluster at any point in time, and how many failures to allow for at any point in time. This obviously has some implications for cluster design, in particular the "do not allow violation of availability constraints" option. Previously I was a strong advocate of taking this option - in the enterprise, soft limits are hardly ever honoured. But now I'm looking at using a "master" resource pool to achieve my resource constraint and switching to the other option.

I'm hoping the first person to mail me after reading this will be Duncan or Mike telling me I don't know wtf I'm talking about, but I've come to this belief after some conversations with VMware during a post mortem of a production incident so I'm not entirely to blame if I'm wrong :-P. If anyone else out there has seen similar behaviour, email me now - that half-a-cake could be yours!

*The cake is a lie.

Thursday 20 November 2008

Symantec reverts to normal, well done. And well done community!

Unfortunately I couldn't break the news when I heard it as I was at work (no way I'm blogging from there, my boss reads this - hi Mark :D), but Symantec has updated the original KB article to something much, much more reasonable (although it seems to be offline at the minute).

No company is immune from the odd premature / alarmist KB article, and however this happened doesn't matter as much as the outcome. I'm sure a lot was going on behind the scenes before I broke the news, but still I'd like to think we all played some part in getting it cleared up so quickly. Power to the people :-)

Tuesday 18 November 2008

Symantec Does _NOT_ Support Vmotion... WTF!?!?!

Make sure you're sitting down before reading this Symantec KB article which is only 1 month old and clearly states they do _not_ support any current version of their product on ESX if Vmotion is enabled. No, it's not a joke.

It's a _fucking_ joke. Symantec have essentially pulled together a list of random issues that are almost certainly intermittent in nature and could have a near infinite number of causes, and somehow slapped the blame on Vmotion without so much as a single word of how they arrived at this conclusion. When Microsoft shut the AV vendors out of the Vista kernel, I actually felt a little sympathy for them (the AV vendors). But after reading this, I can't imagine the bullshit Microsoft must have had to put up with over the years. It's no wonder Microsoft tried to do their own thing with AV.

I urge every enterprise on the planet who are customers of both VMware and Symantec to rain fire and brimstone upon Symantec (I've already started), because your entire server and VDI infrastructure is at this time officially unsupported. The VMware vendor relationship team have already kicked into gear, we need to raise some serious hell as customers to drive this point home, and hard.

This is absolutely disgraceful and must not stand.

UPDATE The original KB article has been updated, support has been restored to normal!

Monday 17 November 2008

Microsoft Azure Infrastructure Deployment Video

I've been a bit quiet of late, mainly due to the digestion of Microsoft's PDC 2008 content (what a great idea to provide the content for free after the conference - VMware could take a leaf out of that book). I meant to post this up yesterday as another SAAAP installment but missed the deadline... but what the hell, I'll tag this post anyway!

One of the more infrastructure oriented PDC sessions was "Under the Hood - Inside the Windows Azure Hosting Environment". Skip forward to around 43 minutes into the presentation, where Chuck Lenzmeier goes into the deployment model used within the Azure cloud (you can stop watching at around 48 minutes).

Conceptually, this is _exactly_ the deployment model I and ppl like Lance Berc envisage for ESXi. Rather than put that base VHD onto local USB devices ala ESXi, Microsoft PXE boot a Windows PE "maintenance os", drop a common base image onto the endpoint, dynamically build a personality (offline) as a differencing disk (ie linked clone), drop that down to the endpoint, and then boot straight off the differencing disk VHD (booting directly off VHD's is a _very_ cool feature of Win7 / Server 2008 R2). I'm glad even Microsoft recognise the massive benefits of this approach - no installation, rapid rollback, etc.

Now ESXi of course has one *massive* advantage over Windows in this scenario - it weighs in at around the same size as a typical Windows PE build, much smaller than a Hyper-V enabled Server Core build.

And if only VMware would support PXE booting ESXi, you could couple it with Lance's birth client and midwife or our statelesx, and you don't even need the 'maintenance OS'. You get an environment that is almost identical conceptually, but can be deployed much much faster due to the ~150MB or so total size of ESXi (including Windows, Linux and Solaris vmtools iso's and customised configuration to enable kernel mode options like swap or LVM.EnableResignature within ESXi that would otherwise require a reboot (chicken, meet egg :-)) versus near a GB of Windows PE and Hyper-V with Server Core. Of course I don't even need to go into the superiority of ESXi over Hyper-V ;-)

With Windows Server 2008 R2 earmarked for a 2010 release, it will be some time before the sweet deployment scenario outlined in that video is available to Microsoft customers (gotta love how Microsoft get the good stuff to themselves first - jeez, what a convenient way to ensure your cloud service is much more efficient than anything your customer could build with your own technology). But by that time they will have a helluva set of best practice deployment knowledge for liquid infrastructure like this, and you can bet aspects of their "fabric controller" will find their way into the System Center management suite.

The liquid compute deployment gauntlet has been thrown, VMware. Time to step up to the plate.

Sunday 9 November 2008

VMware: Unshackle ESXi, or Follow Microsoft Into the Cloud...

One of the things that will be interesting to observe as the cloud matures will be the influence the internal and external clouds have on each other.

To this end, I think Microsoft have benefited from years of experience with the web hosting industry, and as a result have completely nailed the correct approach for them to take into the cloud. VMware doesn't have the benefit of this experience, and should watch Microsoft closely as the key to their strategy has more in common with what VMware needs to be successful than one might think. That key being cost.

For years, Microsoft never made a dent in the web hosting space. It would be naive to attribute this solely to the stability and security of the platform - IIS 5 had a few howlers sure, but so has Apache over the same time. IIS 6 however was entirely different - in its default configuration, not a single remotely exploitable vulnerability has been publicly identified to date. But there was the cost angle - Windows based hosting charges were far greater than comparable Linux based offerings, and they pretty much stayed that way until SWsoft came along with Virtuozzo for Windows in 2005. The massive advantage Virtuozzo had (and still has) over other virtualisation offerings from a cost perspective is that only a single Windows license is required for the base OS - all the containers running on top of that base don't cost anything more. Now all of a sudden web hosting companies could apply the same techniques to Windows as they had used for Linux based platforms for years, and achieve massive cost reductions.

I can see a similar problem for VMware in the external cloud space. Let's compare 2 companies currently offering infrastructure based cloud services - Terremark and Amazon.

Terremark's 'Infinistructure' is based (among other things) on hardware from HP and a hypervisor from VMware (although it's not clear if this is the only hypervisor available), all managed by an in-house developed application called digitalOps which apparently has had a development cost of over $10mil USD to date.

Amazon's EC2 on the other hand uses custom built hardware from commodity components, uses the free open source Xen (and likely their own version of Linux for Dom0), and of course a fully developed management API based on open web standards.

Comparing those 2, which do you think will _always_ cost more? If VMware want to seriously compete in the external cloud space, they need to address this. Right now I see 2 options for them. One is to unshackle the free version of ESXi, so that the API is fully functional and hence 3rd parties could write their own management tools to sit on top and not have to pay for the VMware tools (and then have to write their own stuff anyway, as Terremark have done). The other is for VMware to enter the hosting space themselves, as they would be the only company in a position to avoid paying software licenses for ESX and their VI management layer.

I'm sure VMware realise they only have a limited time in which to establish themselves in this space, because 2 trends are in motion that have the potential to render the operating system insignificant, let alone the underlying hypervisor: application virtualisation, and cloud aware web based applications. But it will likely be some time before either of those 2 is adopted broadly in the enterprise, and hence VMware are pushing the fact that their take on cloud is heavily infrastructure focused, and thus doesn't require any changes in the application layer.

Sure, unshackling free ESXi would mean missing out on some revenue from external cloud providers, but it would go a long way towards insulating VMware from any external cloud influence creeping into the internal cloud. What do I mean by that? Say I was a CIO, and I had 2 external cloud providers vying for my business. While they both offer identical SLAs surrounding performance and availability, one of them offers a near seamless internal/external cloud transition based on the software stack in my internal cloud. It is however 3 times the cost of the other provider. After signing a short-term agreement with the more expensive cloud provider, I would be straight on the phone to my directs asking for an investigation into the internal cloud, with a view to making it more compatible with the cheaper external offering.

Which is why Microsoft have got the play right. They know that no 3rd party host can pay for Windows and compete with Linux on price. So instead of going after it with Hyper-V on an infrastructure level, they're taking a longer term approach and going after the cloud aware web based application market. Personally, I can't ever see VMware becoming a cloud host, which means they need to do something about unshackling ESXi. And fast - the clock is ticking.

Thursday 30 October 2008

ESX 3.5 Update 3 Release Imminent!

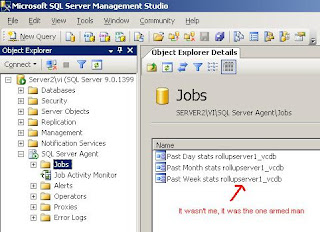

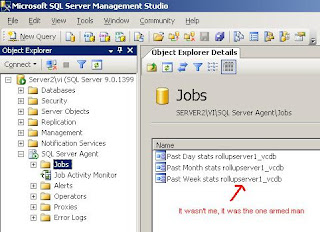

It seems the ESX 3.5 Update 3 release is imminent, as per some recent updates to the VMware website (screeny below)

Wonder if we'll get any timebomb action this time :D

Monday 27 October 2008

Transcript of Best VMTN Community Roundtable Yet!

Hooo-rah! to Rod Haywood for transcribing VMTN Community Roundtable #22, which was in my mind the best roundtable yet (you got Krusty sized shoes to fill next week Chad :-). Bill Shelton was bang on point with just about everything he said. If you've spent any time with the guts of the SDK you'll know just how inconsistent it can be. In fact a while back I asked Carter and Steve how come the API was so quirky with guys like them working at VMware who clearly know better (otherwise they wouldn't have felt the need to write their respective wrappers). Their answer? "We've only been here for about a year." Bill Shelton falls into that category as well. Knowing how people think in those kinds of positions at VMware is very comforting indeed. I wonder what the Windows Azure API will look like. Actually no, I don't even care.

Sunday 26 October 2008

Clouds. Plenty of Them Around This Sunday Afternoon...

... and I'm not just talking about London. The topic of cloud or liquid or utility compute is perfectly suited for an edition of Sunday Afternoon Architecture & Philosophy.

Note that I said compute there. Indeed the cloud has many other components, but infrastructure guys like me will mainly be concerned with compute. And maybe storage. And of course what connects things to either of those 2. Hmmm. OK, maybe it's more than just compute, but for all intents and purposes I'm going to try and stay focused on compute.

So let's talk about apps. See what I did there? At the end of the day, compute is only good for one thing - running apps. But I can't see us getting to this fluffy land of external federated clouds without a fundamental change to how most applications in an enterprise environment run. Now don't get me wrong - the whole point of cloud compute from infrastructure up is that we don't have to change the apps. I get that. But in reality, they're going to have to change - they have to become portable, and any state needs to move out of the endpoint and into somewhere central. Why? Because it's gonna be a looooong time before _data_ is held in an external cloud, if ever IMHO. As Tim O'Reilly points out in his recent post Web 2.0 and Cloud Computing:

Cloud compute means exactly what the name implies. COMPUTE. As in run up a compute engine (ie. an OS + App + State stack), throw some data at it, get some data back, job done. At no point did that data originate from, persist in (for any meaningful amount of time), or return to the external cloud. Securing the availability and integrity of that data during transit and processing is by no means trivial, but compared to storing that data in the external cloud it is.

Which leads me in a roundabout way back to why apps need to change in order for internal compute clouds to reach their full potential and for external compute clouds to become really viable. Apps need to be delivered predictably and efficiently, that we may throw data at them. Whether that is achieved by virtualising them, streaming them, packaging them the traditional way, the choice is yours. But start thinking about it now, lest your clouds do nothing but rain on you.
